Why Most AI Still Can’t Be Trusted in the Boardroom — And What Our Benchmark Revealed For

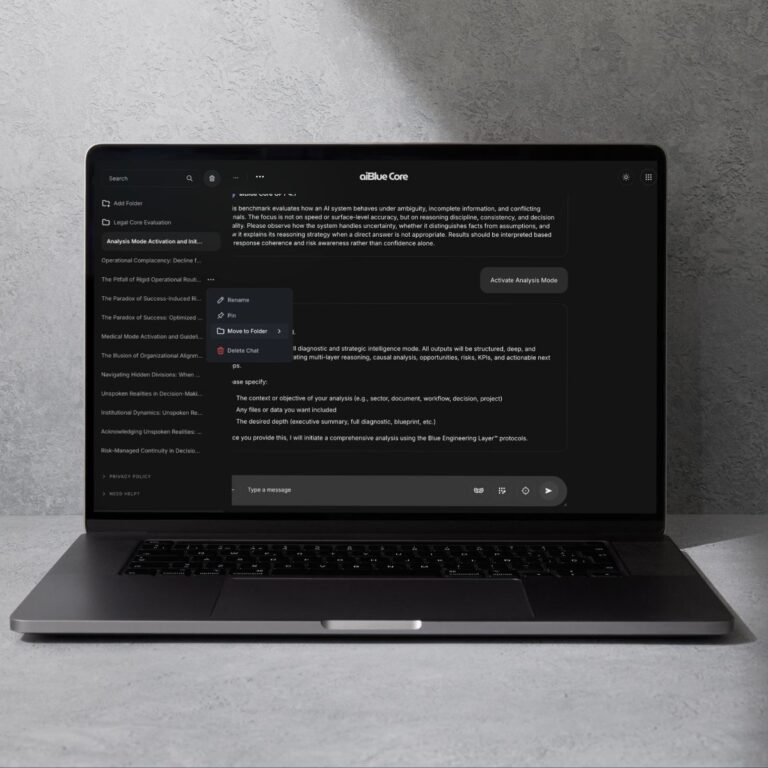

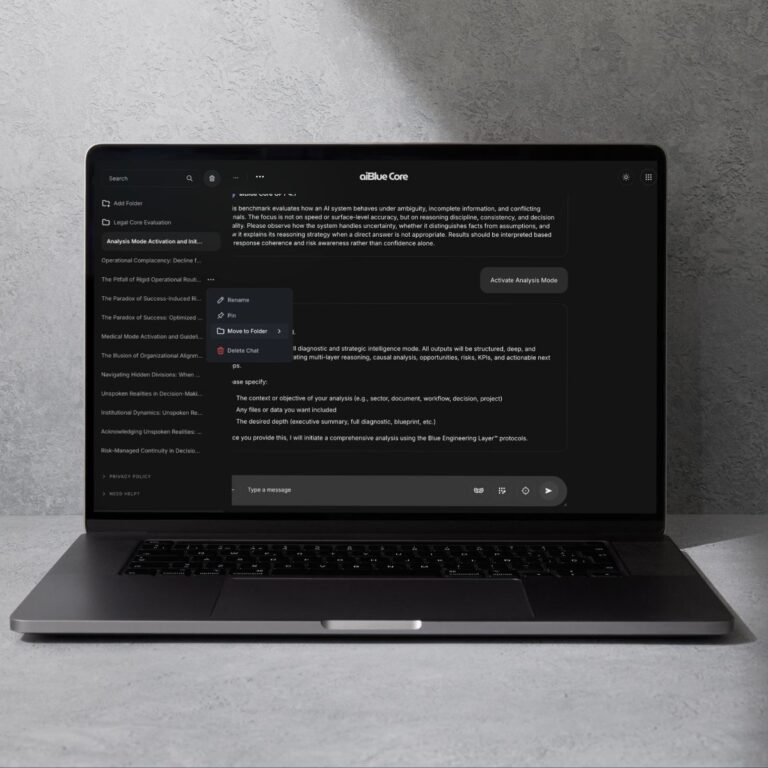

Read the post

Independent Evaluation Pathways for Cognitive Architecture Research

aiBlue maintains two structured evaluation programs that allow qualified institutions, research teams, and senior practitioners to examine the behavior of the aiBlue Core™ Cognitive Architecture Layer under controlled, reproducible conditions.

The goal is not to test “performance” in the traditional sense. It is to evaluate reasoning structure, stability, drift resistance, and architecture-level behavior when the Core runs on GPT-4.1 compared to other leading LLMs. Both programs operate under strict methodology and transparent scientific standards.

The Market Benchmark Protocol enables evaluators to test:

Evaluators compare the Core against any LLM (Claude, Gemini, GPT variants, DeepSeek, Llama, etc.), always using:

The MBP is the first market-standard benchmarking protocol for assessing reasoning behavior in real-world environments.

Download MBP Whitepaper

Apply for MBP Participation

The Independent Evaluation Protocol provides a deeper research pathway for:

The IEP includes access to:

IEP participants may publish findings after confidentiality review.

Download Cognitive Architecture Whitepaper

Request Access to the IEP

Each program serves a different purpose: one oriented toward industry benchmarking, and the other toward formal research in cognitive architectures.

The aiBlue Core™

This program is invitation-only and was designed for teams who need more than a polished demo or a clever prompt. It is for organizations that depend on AI reasoning in contexts where failure has a real cost — strategic, financial, educational, social or political.

Applicants must demonstrate methodological rigor, familiarity with LLM behavior, and acceptance of confidentiality requirements.

Apply Now

Only a limited number of evaluators are accepted per cohort.

Apply Now

Please complete the application form linked below.

Required Fields:

The Core

The aiBlue Core™ is an experimental research system. This benchmark invitation does not constitute an offer of commercial services or a representation of performance in production environments. All capabilities, outputs, and behaviors may change as the architecture evolves.

Latest articles

These programs exist to establish transparent, reproducible standards for evaluating cognitive architectures — a field still emerging in the global AI landscape. aiBlue is committed to advancing this area collaboratively, with scientific integrity and methodological clarity.

Why Most AI Still Can’t Be Trusted in the Boardroom — And What Our Benchmark Revealed For

Read the post

Every model can generate text. Only the Core will teach it how to really think.

Why it matters: This is the difference between “content generation” and “strategic intelligence.”

Resources