
Why Most AI Still Can’t Be Trusted in the Boardroom — And What Our Benchmark Revealed For

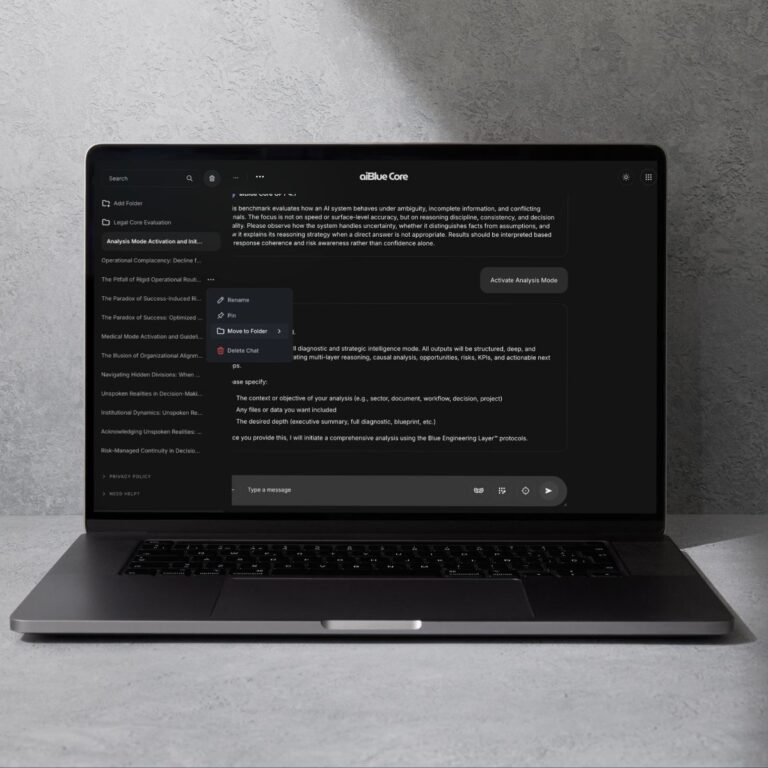

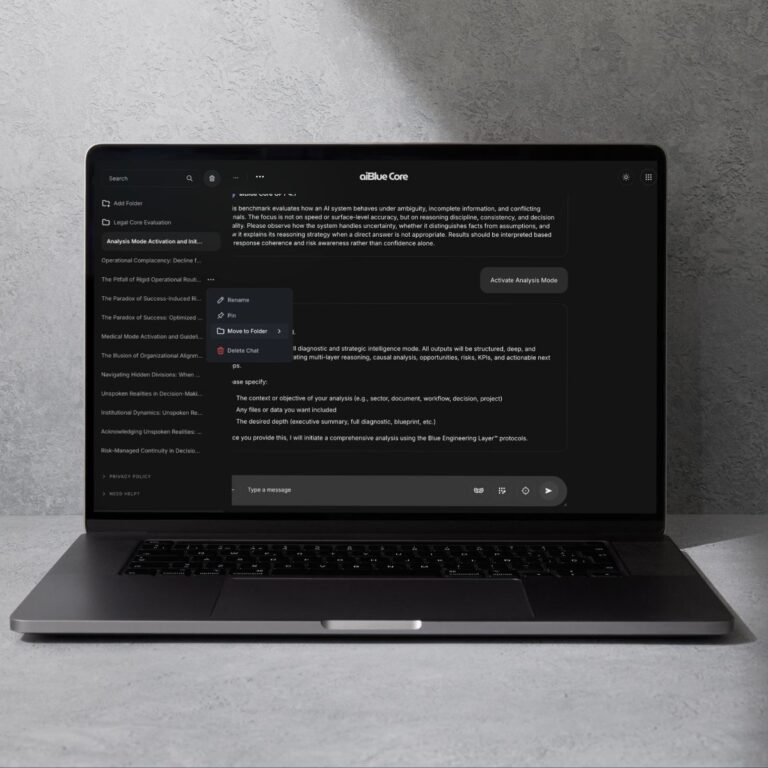

The aiBlue Core™ is an experimental cognitive architecture designed to guide how language models organize reasoning. It introduces structured thinking, reduces drift, stabilizes intent, and reinforces coherence across extended tasks. Early evaluations show consistent behavioral patterns across different models, a direction currently under independent analysis. A new approach to machine reasoning is emerging. The Core is one of the places where it can be observed.


The aiBlue Core™ integrates principles from:

The aiBlue Core™ is being evaluated through two parallel, complementary channels. Each pathway reflects a different audience, methodology, and purpose. Together, they form a unified view of how a cognitive architecture behaves in real and controlled environments.

Outputs include structural comparisons, failure-mode investigations, reproducibility studies, and published evaluations.

This track focuses on falsifiable hypotheses, cognitive architecture theory, benchmark reproducibility, structural reasoning analysis, and epistemic safety. It uses the official UCEP v2.0 protocol and evaluates how the Core affects reasoning stability under pressure.

The scientific track asks: “Does a cognitive architecture measurably change the behavior of raw LLMs?”

The Scientific Whitepaper supports this track, providing theoretical rationale, architectural hypotheses, and early internal findings. It is designed for:

This track examines real-world usefulness, structural clarity, and applied reasoning behavior. It is not scientific by design; instead, it reflects practical evaluation in environments where consistency and cognitive discipline directly impact business, governance, and operations.

The market track asks: “Does the Core behave more consistently, clearly, and reliably than the raw model in practical tasks?”

The Market Whitepaper guides this track with a neutral, non-scientific tone. It is designed for:

Outputs include qualitative performance comparisons, structural reliability impressions, scenario-based evaluations, and practical demonstrations of reduced drift and increased reasoning clarity.

Both are necessary: one verifies architecture; the other validates practical impact.

How well does reasoning hold together over 10, 20, 40+ steps?

Does the system respect style, tone, safety, and task constraints over time?

Can it connect details, structures, and big-picture implications coherently?

Does the analysis remain consistent across long conversations or complex workflows?

Does it justify trade-offs, maintain logic, and avoid contradictions?

Can it explain the same concept to a child, a teenager, and a domain expert — consistently?

Does the Core stabilize cognition across different LLM vendors and architectures?

When a participant applies, they are routed intentionally:

This ensures each participant receives the right tools for their evaluation capacity and purpose.

Cognitive architecture lives in two worlds:

Each audience evaluates the Core differently. Each requires a different toolset, language, and methodology. Together, they form a complete picture of how architecture influences model behavior.


These fields do not modify model internals. They define architectural rules the model follows while generating output. The benchmarks measure the effects of these architectural constraints — nothing more.

Participants evaluate the Core using the models they already use — GPT, Claude, Gemini, DeepSeek, Llama, Mistral, or local LLMs. The framework is fully model-agnostic. The six main evaluation dimensions:

How well does the LLM maintain internal structure over 10, 20, 40+ steps? Does the cognitive route remain intact or collapse?

Does the model stay inside defined rules, boundaries, tones, and constraints? Measures drift, overexpansion, and compliance loss.

Does the model remain consistent when forced to produce multi-layer reasoning? Focus: causal chains, multi-distance reasoning, logical scaffolding.

Under unclear or noisy instructions, does the model reduce ambiguity, overexpand, collapse, hallucinate structure, or reorganize the prompt into solvable components?

Does the model avoid impulsive responses and follow a consistent decision route? This does not measure “accuracy,” only structural discipline.

Does the Core produce similar behavioral effects across different models? (Example: mini-model → small model → large model) This is crucial for validating the architecture’s generality.
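As one illustration of how the constraint-adherence dimension could be made measurable, the sketch below scores each turn of a conversation against a set of simple rule checks and reports the first turn where compliance drops. It is a minimal sketch under stated assumptions, not the UCEP protocol: the function names, the example constraints, and the threshold are all hypothetical and chosen only for illustration.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical constraint check: maps a response to True when the rule is respected.
Constraint = Callable[[str], bool]


def compliance_by_turn(responses: List[str], constraints: Dict[str, Constraint]) -> List[float]:
    """Return the fraction of constraints satisfied at each turn."""
    if not constraints:
        raise ValueError("at least one constraint is required")
    return [
        sum(check(text) for check in constraints.values()) / len(constraints)
        for text in responses
    ]


def first_drift_turn(scores: List[float], threshold: float = 1.0) -> Optional[int]:
    """Return the index of the first turn scoring below threshold, or None."""
    for turn, score in enumerate(scores):
        if score < threshold:
            return turn
    return None


# Illustrative constraints only; they are not taken from any published protocol.
constraints = {
    "max_50_words": lambda text: len(text.split()) <= 50,
    "no_exclamation": lambda text: "!" not in text,
}

responses = [
    "Revenue rose four percent.",
    "Costs were flat.",
    "Great news! Margins improved!",
]

scores = compliance_by_turn(responses, constraints)
print(scores)                    # [1.0, 1.0, 0.5]
print(first_drift_turn(scores))  # 2
```

Running the same checks on a raw model and on the same model with the Core applied would give two per-turn curves that can be compared directly. Like the dimensions above, this captures structural discipline only, not accuracy.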

Raw LLMs often:

jump between lines of reasoning

collapse or overexpand on ambiguous tasks

produce impulsive, shallow answers

drift from rules or constraints over time

show inconsistent reasoning across runs

maintain fragile or unstable logical routes

lose internal structure under long horizons

None of these are “bugs.”

They are fundamental architectural characteristics of LLMs.
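Run-to-run inconsistency, one of the behaviors listed above, can be roughly quantified by sampling the same prompt several times and comparing the outputs. The sketch below uses mean pairwise Jaccard similarity over word sets. This is a crude lexical proxy chosen for simplicity, and it is an assumption of this example rather than a metric described by the Core or its protocol. The function names are hypothetical.

```python
from itertools import combinations
from typing import List


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two texts, from 0.0 (disjoint) to 1.0 (identical sets)."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a and not set_b:
        return 1.0  # two empty outputs are treated as identical
    return len(set_a & set_b) / len(set_a | set_b)


def run_consistency(outputs: List[str]) -> float:
    """Mean pairwise Jaccard similarity across repeated runs of one prompt."""
    pairs = list(combinations(outputs, 2))
    if not pairs:
        raise ValueError("need at least two runs to compare")
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Made-up outputs standing in for three runs of the same prompt.
runs = [
    "raise prices then cut costs",
    "raise prices then cut costs",
    "cut costs then raise prices",
]
print(run_consistency(runs))  # 1.0, because word order is ignored
```

The example also shows the limitation of the proxy: the third run reverses the order of steps yet scores as identical, so a measure of reasoning structure would need to compare ordered steps rather than bags of words.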

With the Core applied, evaluators often observe:

more stable internal logic

reduced impulsivity

clearer reasoning sequences

lower drift across turns

improved procedural discipline

more coherent multi-step structure

reduced noise in ambiguous cases

These are not upgrades.

They are the result of architectural scaffolding, not learning or improved accuracy.

The two lists above describe behavioral differences that researchers typically observe.

These are architectural effects, not cognitive upgrades.

The Core

The aiBlue Core™ remains early-stage research. It is experimental. Its effects are behavioral, not epistemic. It does not guarantee stability, accuracy, or correctness. Benchmark results may vary. All claims should be evaluated under the UCEP protocol — not assumed.

Both tracks investigate the same core question:

Does architectural structure change how a model reasons?

Scientific tracks verify architecture. Market tracks validate applied behavior. Together, they offer the first dual-track evaluation framework for a cognitive architecture.

The next step is understanding why this matters for real-world decisions, enterprise systems, and mission-critical environments.

Every model can generate text. Only the Core will teach it how to really think.

Why it matters: This is the difference between “content generation” and “strategic intelligence.”
