
Why Most AI Still Can’t Be Trusted in the Boardroom — And What Our Benchmark Revealed For

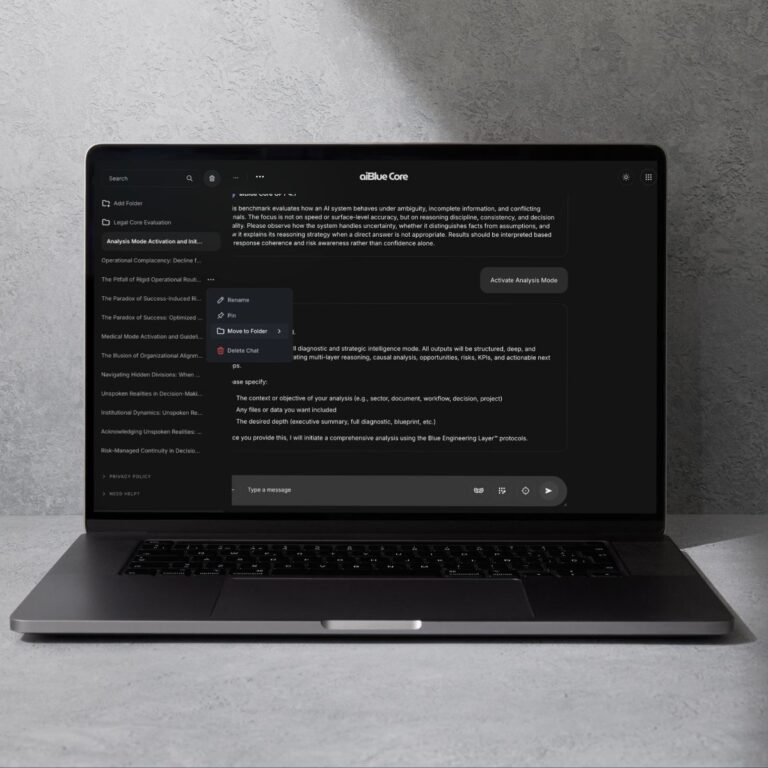

Turn unpredictable AI outputs into structured, reliable, enterprise-grade reasoning.

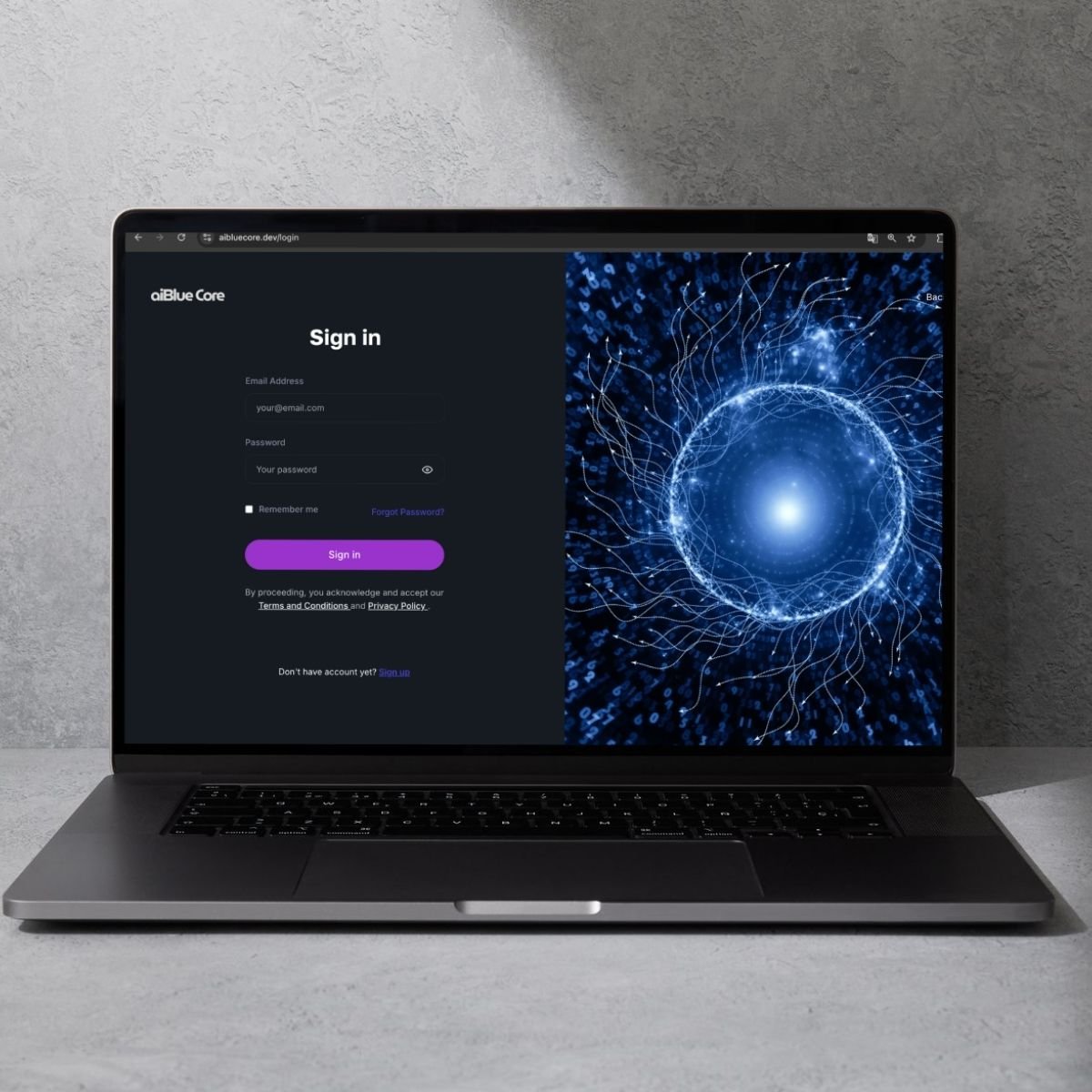

For the first time, we are opening up the principles, mechanisms, and experiments that shape the aiBlue Core™. This site is the public window into the empirical foundations behind an emerging architecture.

Read the Whitepaper

Today, most enterprises are deploying AI tools without a reasoning control layer. The problem isn't a lack of speed. It's a lack of predictability.

But it isn’t controlled. Inside enterprises today: Outputs vary by user Reasoning lacks structure Governance is unclear Hallucination risk is unmanaged AI usage scales without cognitive discipline

Speed without control creates operational risk.

aiBlue Core™ introduces a structured reasoning framework that sits on top of existing LLMs and enforces:

- Context discipline
- Structured reasoning paths
- Benchmark-based validation
- Output consistency
- Governance alignment

This is not another chatbot.

This is reasoning control infrastructure.

If AI decisions matter in your organization, control matters.

- Deployed inside your environment
- Calibrated via a controlled benchmark protocol
- Validated through stress testing
- Activated in enterprise mode

No public deployment. No open usage. Strict governance.

- Predictable reasoning outputs
- Reduced hallucination exposure
- Replicable AI behavior across teams
- Structured executive responses
- Controlled scaling of AI adoption

From experimentation to controlled enterprise execution.

- 30-day controlled benchmark
- Token-based participation
- Enterprise licensing upon approval

Request Access

The base model (small or large) generates raw semantic material. This is where fingerprints of the underlying LLM become observable.

A structured chain-of-thought framework that removes ambiguity, constrains noise, and defines the mental route for the model.

The universal logic layer that governs coherence, direction, structure, compliance, and longitudinal reasoning across all models.

The aiBlue Core™ sits on top of existing large language models and enforces structured reasoning, context discipline, and benchmark-based validation.

Welcome to the Cognitive Architecture Era

Wilson Monteiro

Founder & CEO, aiBlue Labs

FAQ

Questions?

No. Prompt engineering only modifies instructions. The aiBlue Core™ modifies the internal reasoning structure of the model — including constraint application, coherence enforcement, and logical scaffolding — in ways that prompts cannot replicate or sustain.

No. A wrapper affects only the interface or how data is passed to and from the model. The Core works at the cognition layer, shaping how the model reasons, not how responses are packaged.

No. A chatbot layer governs user interaction. The Core governs the model’s internal reasoning architecture independent of any UI or front-end layer.

No. The Core does not alter, fine-tune, or retrain any model weights. It overlays logical structure and constraints while preserving the model’s identity and fingerprint.

No. The Assistant is only a transport layer for tokens. The Core is portable and environment-independent — meaning it would behave the same under any compatible LLM API.

Because small-model testing isolates the effect of the cognitive architecture. When a model has fewer parameters, any improvement in reasoning, structure, stability, or constraint-obedience cannot be attributed to “raw model power” — only to the architecture itself. After validating the architecture in this controlled environment, the Core is then applied to larger models, where the gains become even more visible. The Core is model-agnostic, but starting with smaller models makes the scientific signal easier to measure.

Because the Core is not a model. It doesn’t modify parameters or weights. It enhances reasoning while allowing intrinsic limitations (like embedding constraints or tokenization limits) to remain visible.

Through fingerprint-based validation: run stress tests on a base model (e.g., GPT-4.1 mini) without the Core, then with the Core. The model's fingerprint remains the same, but reasoning discipline and coherence improve. Prompts cannot achieve this combination of preserved identity and enhanced reasoning.

The Core is designed to be model-agnostic. However, empirical validation has so far been limited to GPT-4.1 mini and is now advancing to GPT-4.1. Testing on larger models is part of the upcoming evaluation roadmap.

The aiBlue Core™ belongs to Cognitive Architecture Engineering (CAE), an emerging field focused on reasoning protocols, constraint-based logic, semantic stability, and longitudinal coherence on top of foundational models. It is not prompt engineering, not fine-tuning, and not an interface layer.

Updates

The aiBlue Core™ is now entering external evaluation. Researchers, institutions, and senior strategists can apply to participate in the Independent Evaluation Protocol (IEP) — a rigorous, model-agnostic framework designed for transparent benchmarking. This is a collaborative discovery phase, not commercialization. All participation occurs under NDA and formal protocols.


Every model can generate text. Only the Core will teach it how to really think.

Why it matters: This is the difference between “content generation” and “strategic intelligence.”
