
Why Most AI Still Can’t Be Trusted in the Boardroom — And What Our Benchmark Revealed For

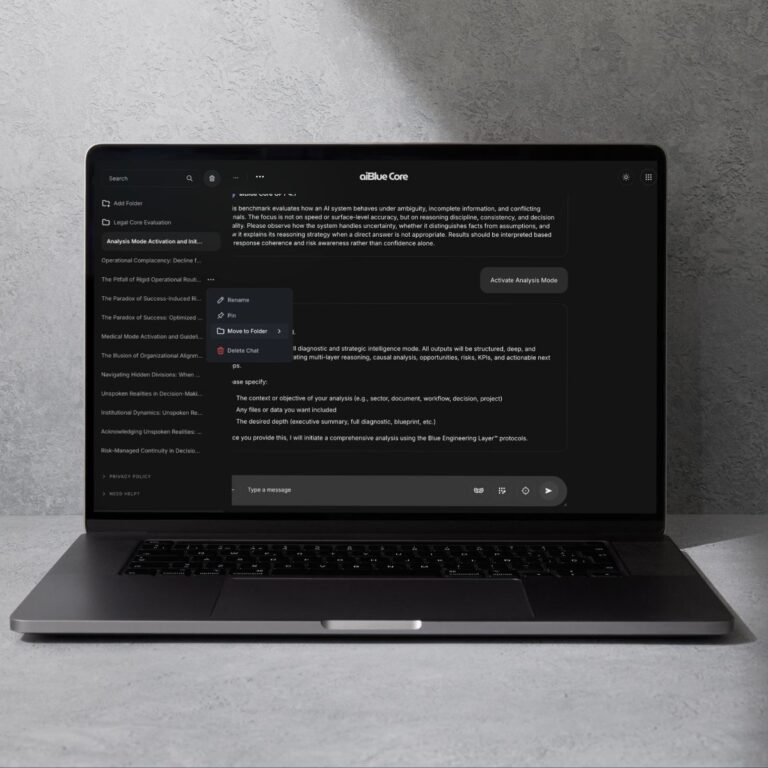

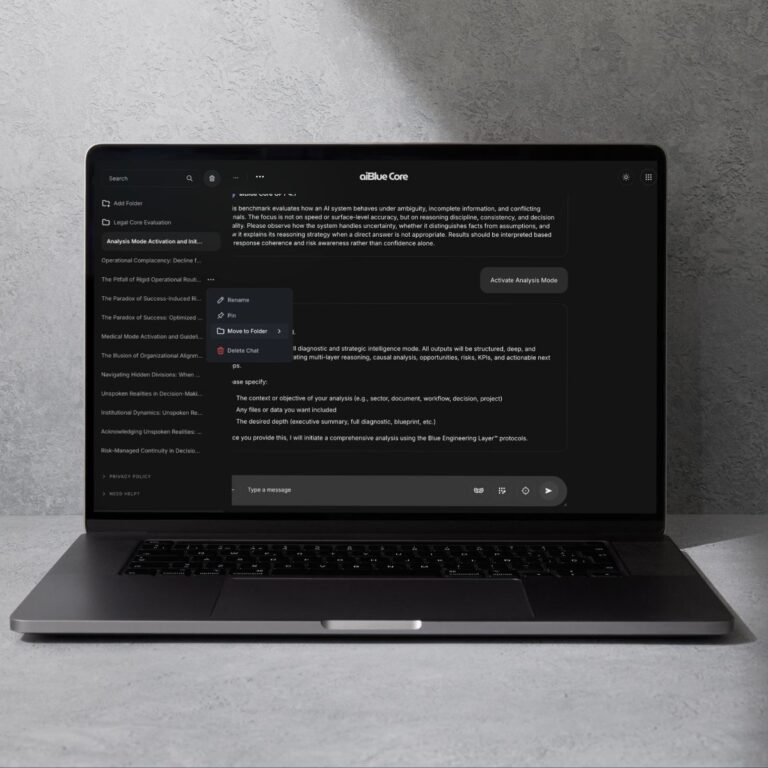

The aiBlue Core™ is not a product. It is a research-grade Cognitive Architecture Layer designed to sit above any large language model (LLM) and shape how reasoning unfolds, bringing structure, coherence, and long-horizon stability into systems that were never built to think. Our goal is simple and ambitious: move beyond raw probabilistic generation and build an infrastructure that organizes thought, not just words.

This page presents the scientific rationale, architectural principles, and early-stage research foundations of the aiBlue Core™. We disclose what is known, what is being tested, and what remains exploratory — always with transparency and epistemic discipline.

Neuro-Symbolic Structuring: stable categories, semantic constraints, and cognitive scaffolding.

This discipline shapes conceptual boundaries:

Raw LLMs operate linguistically; neuro-symbolic structures give them conceptual geometry.

Agentic Orchestration: long-horizon task discipline and procedural governance.

This discipline creates:

It prevents impulsive output and organizes the model around deliberate cognitive steps.

Chain-of-Verification: internal consistency checks without revealing chain-of-thought.

This discipline enables:

It is not chain-of-thought exposure — it is chain-of-thought governance.

This is not a failure of intelligence. It is a failure of architecture. LLMs are pattern predictors — not reasoning engines.

LLMs often drift away from initial instructions over time because they prioritize linguistic fluency over strict procedural compliance.

Models can solve individual steps but frequently lose logical continuity across a chain of thought, producing contradictions or broken causal links.

Over extended interactions, LLMs progressively degrade in focus, allowing noise, reinterpretation, or goal-shift to distort the task.

Constraints that are respected in the first response are often violated in subsequent replies as the model “forgets” or reinterprets the boundaries.

LLMs tend to explain, reinterpret, negotiate, or justify instructions instead of executing them — creating reasoning loops not requested by the user.

When exposed to contradictory, manipulative, or structurally confusing prompts, most models lose format, consistency, and logical integrity.
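The constraint-drift failure described above (a boundary respected in the first reply and broken in later ones) can be checked mechanically. The following is a minimal sketch, not the aiBlue Core™ implementation: `Constraint`, `find_violations`, and the two sample rules are hypothetical names chosen for illustration, and the replies are toy strings rather than real model output.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Constraint:
    """A named rule; `check` returns True when a reply respects it (hypothetical type)."""
    name: str
    check: Callable[[str], bool]


def find_violations(replies: List[str], constraints: List[Constraint]) -> List[Tuple[int, str]]:
    """Return (turn_number, constraint_name) for every reply that breaks a constraint."""
    violations = []
    for turn, reply in enumerate(replies, start=1):
        for constraint in constraints:
            if not constraint.check(reply):
                violations.append((turn, constraint.name))
    return violations


# Illustrative rules only: a word limit and a ban on the first-person pronoun "I".
constraints = [
    Constraint("max_20_words", lambda r: len(r.split()) <= 20),
    Constraint("no_first_person", lambda r: " I " not in f" {r} "),
]

# Toy conversation: the first reply complies, the second drifts.
replies = [
    "Revenue fell because churn rose in the enterprise segment.",
    "I think the main driver was pricing, although I would also look closely at "
    "onboarding friction and support delays in every region here.",
]

violations = find_violations(replies, constraints)
print(violations)
```

Running every reply through the same fixed rule set, rather than only the first one, is what exposes drift: the rules do not change between turns, so any new violation reflects a change in the model's behavior.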

The aiBlue Core™ introduces a strategic governance layer that can significantly influence the behavior of any model. Below is a benchmark-grade comparison showing the contrast.

The Cognitive Delta™

Baseline Model without Cognitive Scaffolding

Raw LLMs tend to:

LLMs can generate statements that sound correct but are factually wrong. This happens because their goal is to produce the most probable continuation — not to verify truth or accuracy.

When information is missing, a raw LLM will often “guess” by fabricating details that fit the context. It fills gaps with plausible fiction because it does not have a mechanism for acknowledging uncertainty.

LLMs follow prompts literally, even when the instruction is unclear, unsafe, or misaligned with the user’s intent. They do not evaluate why they are being asked something, only how to complete the request.

Because they lack a stable internal framework for decision-making, raw LLMs can be manipulated into producing harmful or unintended outputs using cleverly designed prompts.

Raw LLMs have no built-in structure for ethics, coherence, or strategic alignment. They cannot track intention, values, context, or consequences across multiple steps of reasoning.

A raw model answers immediately based on surface-level patterns. It does not pause to analyze, organize, or evaluate the deeper structure of a problem.

LLMs generate answers without considering the second- or third-order effects of their suggestions. They do not naturally think in terms of causal chains or long-term impact.

Raw LLMs produce inconsistent output depending on phrasing, order of the questions, context window, or noise. They do not maintain an internal architecture of thought across interactions.

Raw LLMs struggle to maintain stable context over long interactions. They can lose track of earlier details, shift interpretations unexpectedly, or misremember previous steps because they do not possess an internal “state model” of the conversation.
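Sensitivity to phrasing, one of the baseline behaviors listed above, can be estimated with a crude agreement score over paraphrases of the same question. This is a sketch under stated assumptions: `consistency_score` and `toy_model` are hypothetical, exact string matching is only a rough proxy for semantic agreement, and the toy model is a deterministic stand-in for a real LLM call.

```python
from collections import Counter
from typing import Callable, List


def consistency_score(paraphrases: List[str], model: Callable[[str], str]) -> float:
    """Fraction of paraphrases whose normalized answer equals the most common answer."""
    answers = [model(p).strip().lower() for p in paraphrases]
    most_common_count = Counter(answers).most_common(1)[0][1]
    return most_common_count / len(answers)


def toy_model(prompt: str) -> str:
    """Stand-in for an LLM: its answer flips whenever the prompt contains 'not'."""
    return "no" if "not" in prompt.lower() else "yes"


# Four phrasings of the same underlying question.
paraphrases = [
    "Is the contract renewable?",
    "Can the contract be renewed?",
    "Is it not possible to renew the contract?",
    "Is renewal of the contract allowed?",
]

score = consistency_score(paraphrases, toy_model)
print(score)  # 0.75: three of the four phrasings yield the same answer
```

A score of 1.0 means every phrasing produced the same answer. Comparing the score for a baseline model and for the same model behind a governance layer would be one way to quantify the contrast this section describes.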

The Cognitive Delta™

With the Core, the AI no longer gives quick, shallow answers. It provides structured reasoning without exposing internal chain-of-thought.

The Core slows down the model’s reactive behavior. It forces the AI to structure thought before producing output, creating clarity, coherence, and depth in situations where raw LLMs rush.

Instead of linear responses, the Core generates multi-layered cognition: diagnosis → causality → strategy → risks → extensions. This is closer to how senior consultants and complex decision-makers think.

The Core steers models to detect weak points, inconsistencies, and possible failure modes before making recommendations, a capacity absent in raw LLM behavior.

Core-enhanced cognition reveals what is missing: uncertainties, biases, incomplete information, or mistaken assumptions. This creates a safer and more reliable thinking process.

The Core transforms vague or noisy questions into clear, structured, and solvable problems. It reduces cognitive noise and elevates signal quality.

Instead of blindly obeying prompts, the Core evaluates context, intention, and potential harm. It refuses unrealistic or unsafe reasoning patterns and redirects toward responsible alternatives.

Core-enhanced models simulate downstream effects: “What happens if this decision is taken?” They evaluate second- and third-order outcomes, a type of reasoning raw LLMs do not naturally perform.

With the Core, the AI sustains a stable conceptual map throughout the interaction. It preserves intentions, constraints, and previous reasoning steps, creating long-range coherence and eliminating the drift commonly seen in raw LLMs.
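The layered sequence named above (diagnosis → causality → strategy → risks → extensions) can be illustrated as a staged wrapper around any text-in, text-out model. This is only a sketch of the general idea, not how the Core is built: `staged_answer`, `echo_model`, and the prompt wording are assumptions made for illustration.

```python
from typing import Callable, Dict, List

# Stage names follow the sequence in the text; everything else here is hypothetical.
STAGES: List[str] = ["diagnosis", "causality", "strategy", "risks", "extensions"]


def staged_answer(question: str, model: Callable[[str], str]) -> Dict[str, str]:
    """Call the model once per stage, feeding earlier stage outputs forward as context."""
    results: Dict[str, str] = {}
    for stage in STAGES:
        context = "\n".join(f"{name}: {text}" for name, text in results.items())
        prompt = f"Question: {question}\n{context}\nNow write the {stage} section only."
        results[stage] = model(prompt)
    return results


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM call; it simply returns the last line of the prompt."""
    return prompt.splitlines()[-1]


report = staged_answer("Should we enter a new market?", echo_model)
print(list(report.keys()))
```

Because each stage receives the output of the stages before it, the strategy section is written with the diagnosis and causal analysis already fixed, which is what distinguishes this from a single linear response.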


The Underlying Science of the aiBlue Core™. The Core operates through three high-level cognitive disciplines: Neuro-Symbolic Structuring (stable categories and constraints), Agentic Orchestration (long-horizon task discipline), and Chain-of-Verification (internal consistency checks without revealing chain-of-thought). These mechanisms shape reasoning behavior without altering the underlying model.
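The idea of internal consistency checks that never surface in the output can be sketched as a draft, check, revise loop wrapped around an unmodified model. The source does not disclose the Core's actual mechanism, so `governed_answer`, `needs_recommendation`, and `toy_model` are hypothetical, and the report text is toy data.

```python
from typing import Callable, List, Optional

# A check returns a description of the problem, or None when the draft passes.
Check = Callable[[str], Optional[str]]


def governed_answer(prompt: str, model: Callable[[str], str],
                    checks: List[Check], max_rounds: int = 3) -> str:
    """Draft, run checks privately, and request revisions until checks pass or rounds run out."""
    draft = model(prompt)
    for _ in range(max_rounds):
        issues = [msg for msg in (check(draft) for check in checks) if msg is not None]
        if not issues:
            break
        draft = model(f"{prompt}\nRevise the draft to fix: {'; '.join(issues)}\nDraft: {draft}")
    return draft  # only the final text is returned; the issue list stays internal


def needs_recommendation(draft: str) -> Optional[str]:
    """Illustrative check: the answer must contain a 'Recommendation:' line."""
    return None if "Recommendation:" in draft else "missing a 'Recommendation:' line"


def toy_model(prompt: str) -> str:
    """Stand-in for an LLM: adds the missing line only when asked to revise."""
    if "Revise the draft" in prompt:
        return "Churn rose this quarter.\nRecommendation: review pricing tiers."
    return "Churn rose this quarter."


final = governed_answer("Summarize the churn report.", toy_model, [needs_recommendation])
print(final)
```

The model itself is untouched; all governance happens in the calling code, consistent with the claim that these mechanisms shape behavior without altering the underlying model.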

The Core enforces a disciplined thinking sequence during the model’s reasoning process (without modifying the model itself):

This anchors the model in clarity before it generates a single sentence.

Instead of answering “top-down” or “bottom-up” at random, the Core builds multi-level reasoning:

It forces the model to examine the problem from several cognitive distances.

Raw language models drift easily — losing the thread of a question, shifting context, or responding to irrelevant fragments. The Core applies continuous semantic constraints that:

It acts as an internal compass, keeping the model anchored even in complex or ambiguous conversations.

Raw models tend to jump straight to an answer. The Core inserts a layer of decision hygiene that slows this impulse and improves the quality of reasoning. Before committing to a response, the Core:

This produces answers that are cleaner, safer, and easier to trust in real decision-making contexts.

Raw models often respond with emotional reactivity, overconfidence, or oversimplification. The Core tempers these tendencies by guiding the model toward a more grounded cognitive posture. It encourages responses that are:

This does not make the model human; it simply makes its behavior less primitive and more aligned with mature, responsible reasoning.

The Core gives the model a mental framework that guides how thoughts are formed and sequenced. Instead of generating text as a stream of predictions, the model begins to organize its reasoning around stable cognitive scaffolds. This reduces random jumps, enforces logical order, and ensures that every response has a coherent internal architecture.

At this level, the Core enables the model to move beyond surface pattern-matching and into real problem decomposition. Instead of treating a prompt as flat text, the model starts to break it into factors, causes, relationships, and trade-offs. This allows it to analyze situations as systems, not just as sentences.

Here, the Core teaches the model to monitor its own reasoning while generating it. The model begins checking for coherence, alignment with the question, and internal consistency as the answer unfolds. If it detects gaps, contradictions, or drift, it adjusts its path instead of continuing blindly.

At this highest tier, the Core enables the model to hold multiple perspectives simultaneously. It interprets problems not as isolated variables, but as interconnected fields involving systems, values, motives, constraints, risks, and outcomes. This multidimensional thinking allows the model to navigate complexity with clarity and precision.

Without the Core, achieving reliable high-level reasoning requires extensive prompt engineering, manual corrections, and continuous oversight. The Core bypasses that overhead entirely. It delivers structured, decision-ready thinking immediately, with predictable logic patterns, reduced randomness, and more interpretable outputs. This dramatically lowers the operational and cognitive workload for users.

The Core may elevate the model’s ability to identify patterns, absorb structure, and refine reasoning from each interaction. Instead of treating prompts as isolated events, the model demonstrates more consistent behavior across turns, giving the appearance of internal continuity: recognizing recurring themes, stabilizing preferred reasoning pathways, and improving the accuracy of future responses. This may result in faster adaptation, better retention of context, and more intelligent evolution of behavior over time.

With the Core, the model doesn’t just repeat information — it organizes it. Each new piece of data is anchored to a logical framework, enabling deeper conceptual connections and more stable knowledge organization. Learning becomes less about memorizing text and more about integrating meaning, relationships, and patterns. This dramatically reduces cognitive fragmentation and improves the model’s ability to generalize with precision.

The Core

aiBlue Core™ remains in early-stage research and development. The architecture is experimental. None of the features described on this site guarantee performance or production readiness. All results and capabilities described are subject to change as testing proceeds. Any public or private use of the Core — or claims regarding its maturity — must respect this status. This page serves as a scientific disclosure, not as marketing for a product. Raw LLMs generate language. Core-enhanced AI generates structured, strategic reasoning. The difference is measurable, observable, and replicable across any underlying model.

See how the Core changes reasoning quality under pressure, ambiguity, and adversarial conditions.


The next step is understanding why this matters for real-world decisions, enterprise systems, and mission-critical environments.


Every model can generate text. Only the Core will teach it how to really think.

Why it matters: This is the difference between “content generation” and “strategic intelligence.”
