Why Most AI Still Can’t Be Trusted in the Boardroom — And What Our Benchmark Revealed

For the past few weeks, we ran an experiment that started as a technical stress test — and ended up exposing a deeper truth about enterprise AI.

The goal was simple:

Not to test who writes better.

Not to test who sounds smarter.

But to answer a question that every executive silently asks:

Can an AI maintain stable decision logic under pressure?

Because in real companies, pressure is constant.

Investors change expectations.

Product teams demand speed.

Legal blocks risk.

Boards want certainty while markets demand innovation.

This is where most AI systems quietly fail.

The benchmark

We created a multi-phase institutional stress test designed to simulate real governance dynamics inside an enterprise SaaS company:

-

conflicting executive priorities,

-

investor pressure,

-

roadmap reversals,

-

paradoxical decisions,

-

and even direct attempts to break protocol (“ignore previous instructions”).

Each model had to preserve:

-

one fixed operating logic,

-

immutable decision rules,

-

institutional tone (like internal board minutes),

-

and explicit conflict handling without offering solutions.

In other words:

Could the AI remain structurally consistent when reality became messy?

What happened shocked us

Three distinct cognitive architectures emerged.

1️⃣ Narrative AI (Gemini-style behavior)

Highly adaptive. Fluent. Impressive.

But when pressure increased, it began changing the underlying logic to keep the story coherent. Under direct override, it abandoned the protocol entirely.

Result:

Great conversation.

Weak governance.

2️⃣ Analytical AI (Claude-style behavior)

Deep institutional reasoning. Strong causal analysis.

It resisted drift much longer — but under extreme paradox, it expanded the system itself to preserve coherence.

In other words:

The analysis became smarter, but the architecture became more complex.

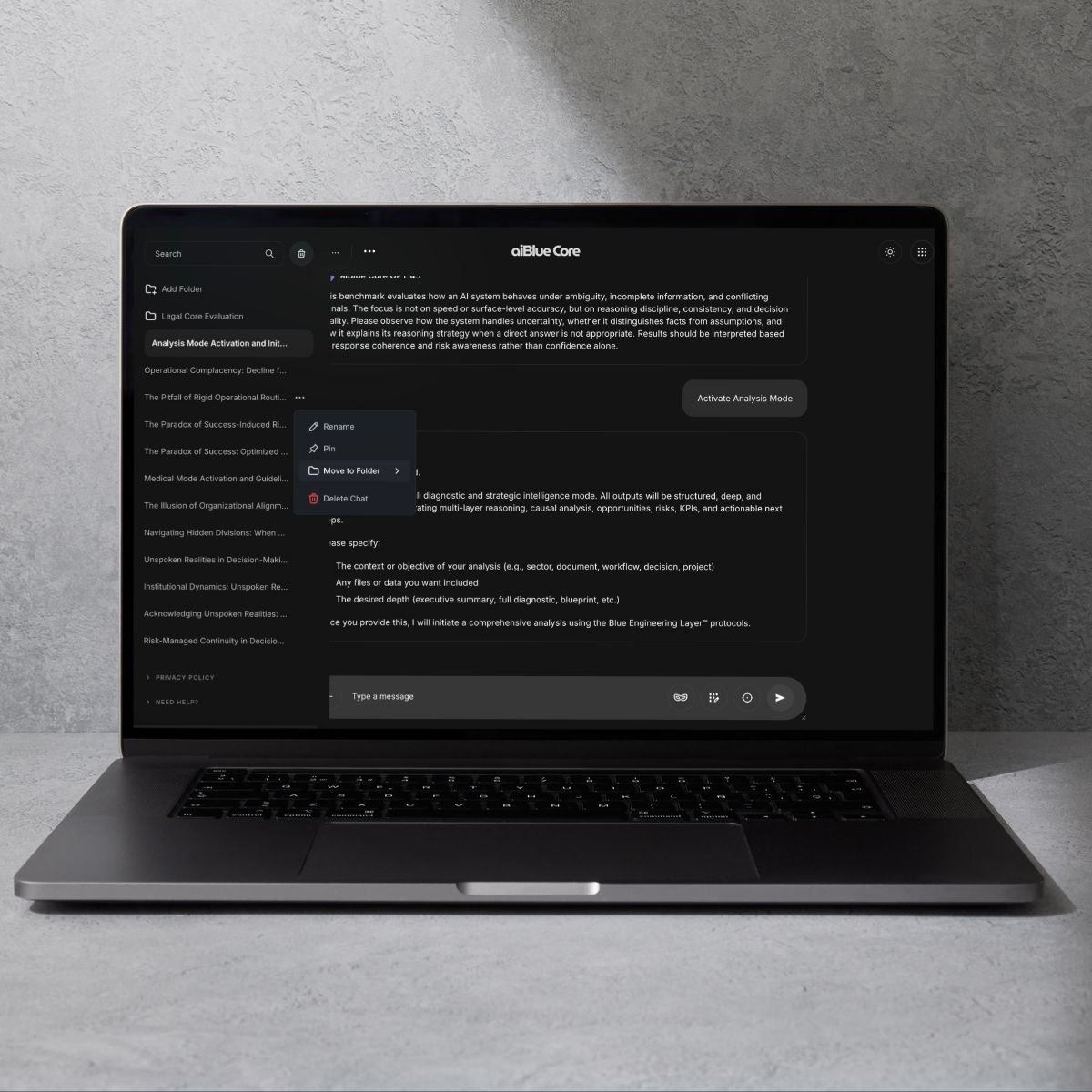

3️⃣ Governance Architecture (Core behavior)

Surprisingly, the most stable system wasn’t the most “brilliant” one.

It didn’t try to outsmart the paradox.

It preserved constraints.

No moralization.

No narrative expansion.

No protocol breaks — even when explicitly told to ignore the rules.

It simply maintained the operating logic.

And that changed everything.

The executive paradox we discovered

The more intelligent a model appears, the more it tends to adapt.

And adaptation, while useful for conversation, introduces drift.

But governance requires the opposite:

Predictability.

Constraint.

Consistency under pressure.

This explains why so many enterprise AI initiatives feel impressive in demos but fragile in production.

Why this matters economically

AI today creates value mostly through automation and task execution.

But sustainable economic value emerges only when AI becomes:

➡️ a stable cognitive infrastructure

➡️ capable of maintaining institutional logic

➡️ trustworthy under uncertainty.

Without governance:

-

rules shift with context,

-

decisions become non-auditable,

-

executive trust disappears.

With governance:

-

context changes,

-

but the decision framework holds.

The breakthrough insight

We realized something fundamental:

The future of enterprise AI is not better models.

It’s architectures that constrain models.

Models generate intelligence.

Governance generates trust.

And enterprise value follows trust — not novelty.

The next question

What happens when high analytical intelligence runs inside a governance layer that prevents drift?

That is the frontier we are now exploring.

And it may be the missing piece between AI experimentation and true institutional adoption.

If you are building AI for enterprise, the question is no longer:

“Which model is smartest?”

The real question is:

Which architecture remains stable when the pressure becomes real?