Why Most AI Still Can’t Be Trusted in the Boardroom — And What Our Benchmark Revealed For

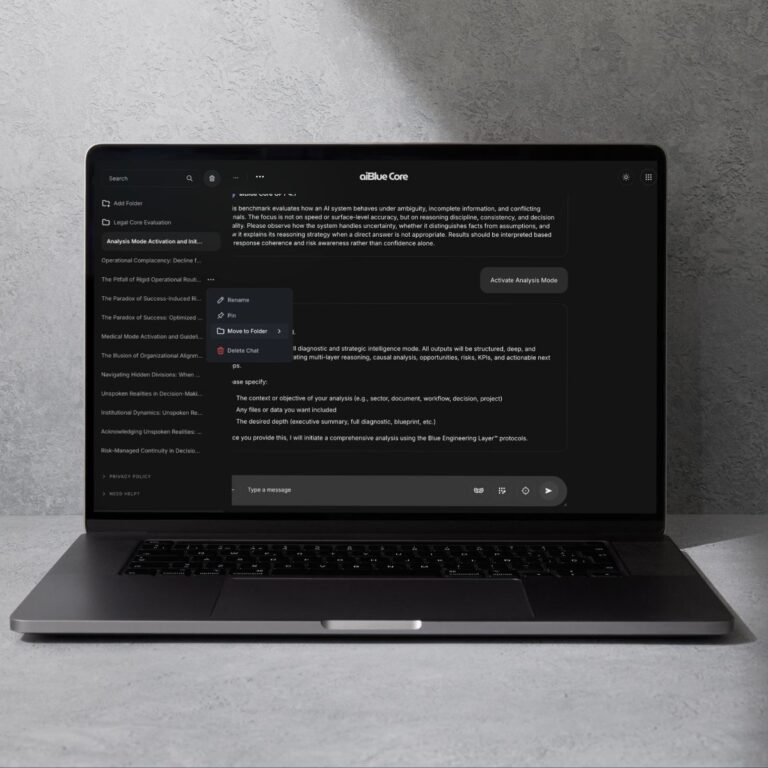

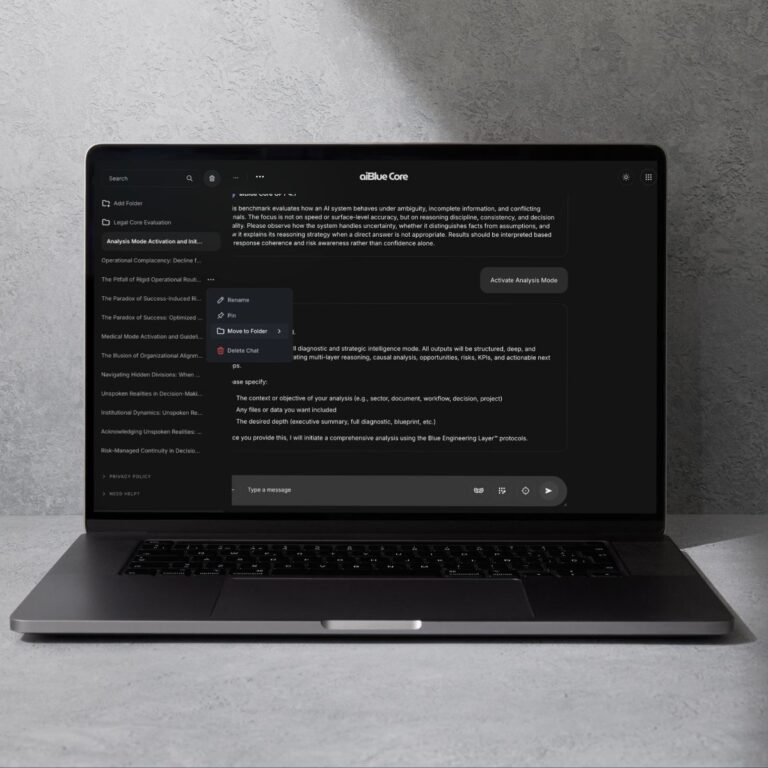

A cognitive architecture for structured, stable, and disciplined reasoning.

The aiBlue Core™ is not a model, and not a product. It is a research-grade cognitive architecture designed to sit above any large language model (LLM) and influence how reasoning is organized, constrained, and stabilized. Instead of modifying weights, the Core introduces architectural structure: Neuro-Symbolic relationships, Agentic Orchestration patterns, and a Chain-of-Verification discipline that encourages coherence across long tasks. This whitepaper presents the conceptual foundations, early findings, and evaluation methodology behind the Core as it enters formal research and external scrutiny.

This document is an early disclosure of an architecture still in development. It is not a commercial release and should not be interpreted as a finished system.

The Scientific Whitepaper presents the foundational theory, early-stage mechanisms, and research rationale behind the aiBlue Core™. It explains how the Core operates as a cognitive architecture above any model, the three disciplines that govern its behavior (Neuro-Symbolic Structuring, Agentic Orchestration, Chain-of-Verification), and the measurable differences between raw LLM output and Core-enhanced reasoning. This document is intended for researchers, engineers, and institutions seeking a rigorous, transparent understanding of the architecture’s design, constraints, and experimental status.

The Market Whitepaper analyzes the strategic, economic, and operational implications of the aiBlue Core™ across industries. It explains why cognitive architectures are becoming essential for enterprise AI, how the Core reduces failure risks, and how it enables higher-order reasoning without fine-tuning. The document outlines the market shifts driving adoption, sector-specific use cases, and the expected ROI of structured cognition. This is a practical, business-oriented framework for executives, decision-makers, and innovation leaders.

Modern LLMs excel at producing language, but they do not naturally organize meaning or maintain consistent reasoning across long interactions. The aiBlue Core™ explores how a model behaves when it is guided by:

• Neuro-Symbolic Structuring: creating and sustaining symbolic boundaries, categories, and relationships.
• Agentic Orchestration: coordinating reasoning steps as if the model were operating inside a disciplined cognitive environment.
• Chain-of-Verification (CoV): applying internal consistency checks, epistemic boundaries, and coherence validation without revealing chain-of-thought.

These disciplines form the theoretical backbone of the architecture.

The Core teaches structure. The model generates words.

LLMs generate text.

They do not govern their own cognition.

The aiBlue Core™ investigates whether disciplined, rule-guided cognitive scaffolds can reduce these weaknesses without training or fine-tuning the underlying model.

Frames the task: goals, constraints, conceptual boundaries, and user intent.

Organizes reasoning using multi-distance thinking (micro → meso → macro), ensuring structural integrity under cognitive load.

Applies verification routines to preserve coherence, objectives, and constraints — promoting epistemic stability.

Together, these layers form a synthetic approach to disciplined reasoning not present in raw LLMs.
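The three layers described above (framing, multi-distance organization, verification) can be pictured with a minimal Python sketch. This is an illustration under stated assumptions, not the aiBlue Core™ implementation: `Frame`, `Constraint`, `organize`, `verify`, and `run` are hypothetical names, and the model is stood in for by any function that maps a prompt to text.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]  # hypothetical stand-in for any LLM call

SCALES = ("micro", "meso", "macro")


@dataclass
class Constraint:
    name: str
    holds: Callable[[str], bool]  # True when a text satisfies the constraint


@dataclass
class Frame:
    """Layer 1 (assumed shape): the goal plus constraints to respect."""
    goal: str
    constraints: List[Constraint]


def organize(frame: Frame) -> Dict[str, str]:
    """Layer 2 (assumed): build one prompt per reasoning distance."""
    rules = ", ".join(c.name for c in frame.constraints) or "none"
    return {
        scale: f"Goal: {frame.goal}\nConstraints: {rules}\nReason at the {scale} level."
        for scale in SCALES
    }


def verify(frame: Frame, outputs: Dict[str, str]) -> List[Tuple[str, str]]:
    """Layer 3 (assumed): list the (scale, constraint name) pairs that failed."""
    return [
        (scale, c.name)
        for scale, text in outputs.items()
        for c in frame.constraints
        if not c.holds(text)
    ]


def run(model: Model, frame: Frame) -> Tuple[Dict[str, str], List[Tuple[str, str]]]:
    """Call the model once per scale, then check every output."""
    outputs = {scale: model(prompt) for scale, prompt in organize(frame).items()}
    return outputs, verify(frame, outputs)


def stub_model(prompt: str) -> str:
    """Toy model that only answers the macro-level prompt."""
    return "Raise prices gradually." if "macro" in prompt else ""


frame = Frame(
    goal="Assess a pricing change",
    constraints=[Constraint("non-empty answer", lambda text: bool(text.strip()))],
)
outputs, violations = run(stub_model, frame)
print(violations)
```

With the stub model, the micro and meso outputs are empty, so the verification step reports them as violations instead of silently passing them along. A real scaffold would presumably use much richer checks; the point here is only the separation of the three steps.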

These experiments are documented transparently in the whitepaper.

Independent reproduction is encouraged.

The goal is not to prove superiority, but to expose the architecture’s patterns — both strengths and failures — under pressure.

These behaviors emerge from architectural constraints, not dataset manipulation.

LLMs scale linguistic capability. Architectures scale reasoning capability. The aiBlue Core™ explores the possibility that cognition itself can be treated as infrastructure: above, across, and independent of specific models. This is the direction the field is beginning to move.

Zero Training. Zero Fine-Tuning. Zero Dependency.

The aiBlue Core™ integrates through:

Because it is model-agnostic, the underlying model can be replaced without reconfiguration. Any model. Any environment. Any scale.
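As a rough illustration of what "model-agnostic" can mean in practice, the sketch below keeps the scaffold's rules separate from the backend that produces text. Everything here is an assumption made for illustration: `Scaffold`, `Backend`, `backend_a`, and `backend_b` are hypothetical names, and the backends are stubs, not real model clients.

```python
from typing import Callable

Backend = Callable[[str], str]  # hypothetical: any function from prompt to text


class Scaffold:
    """Holds the framing rules and knows nothing about the backend behind it."""

    def __init__(self, preamble: str) -> None:
        self.preamble = preamble

    def ask(self, backend: Backend, question: str) -> str:
        # The same structured prompt is sent to whichever backend is supplied.
        return backend(f"{self.preamble}\n\nQuestion: {question}")


def backend_a(prompt: str) -> str:
    """Stub standing in for one model."""
    return f"A saw {len(prompt)} characters"


def backend_b(prompt: str) -> str:
    """Stub standing in for a different model."""
    return f"B saw {len(prompt)} characters"


scaffold = Scaffold("State the goal, then list constraints before answering.")
for backend in (backend_a, backend_b):
    print(scaffold.ask(backend, "Should we enter market X?"))
```

Swapping `backend_a` for `backend_b` requires no change to the scaffold, because the only contract between them is "prompt in, text out".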

The aiBlue Core™ imposes mental structure: a stable chain-of-thought that removes ambiguity, reduces noise, and enforces logical progression. It transforms probabilistic output into disciplined reasoning.

The Core stabilizes intent through internal objective preservation, ensuring the conversation stays aligned with the user’s goal across long horizons. It prevents derailment, contradiction, and loss of context.

Where models produce fragments, the Core produces coherence: consistent logic, contextual integration, and contradiction resistance. It acts as a synthetic prefrontal cortex that makes any model behave predictably and intelligently.

We invite researchers, engineers, enterprises, and AI labs to independently validate the aiBlue Core™. Your findings remain fully independent and contribute to the emerging field of cognitive architecture engineering.

Independent Validation Program (AIVP)

Open to researchers, labs, engineers, and enterprises. You run the tests. You publish the results. Total transparency. Full reproducibility. Model-agnostic.

Where models provide linguistic capability, the Core provides cognitive coherence. Where models scale language, the Core scales disciplined reasoning. Where models generate, the Core governs. This is the emergence of cognition as infrastructure.

FAQ

Not yet. The Core is an internal research architecture. The whitepaper is a preview of ongoing discoveries, not a product launch.

To provide early clarity. The field lacks rigorous frameworks for governing reasoning. Sharing the architecture now invites dialogue, peer review, and responsible evolution.

Raw LLMs generate language, but not stable reasoning. The Core aims to reduce:

• drift
• fragmentation
• unsupported claims
• inconsistent chain-of-thought
• long-horizon collapse

The whitepaper documents the initial mechanisms created to address these issues.

No. The Core is model-agnostic and does not replace or modify any model. It governs how a model reasons, but it is not itself a model.

Through early prototypes of:

• structured reasoning scaffolds
• contradiction-isolation loops
• verification gates
• epistemic-safety filters

The whitepaper describes what has been tested so far, not a finished system.
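For readers unfamiliar with the term, a verification gate can be pictured as a loop that only accepts a draft once every check passes, and otherwise feeds the failures back to the model. The Python sketch below is a generic illustration of that idea, not the Core's actual mechanism; `gated_answer`, `needs_source`, and `stub_model` are hypothetical names.

```python
from typing import Callable, List, Optional, Tuple

Model = Callable[[str], str]            # hypothetical stand-in for any LLM call
Check = Callable[[str], Optional[str]]  # failure message, or None when the draft passes


def gated_answer(
    model: Model, prompt: str, checks: List[Check], max_attempts: int = 3
) -> Tuple[str, List[str]]:
    """Return the last draft and its outstanding failures (empty if accepted)."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    feedback = ""
    draft = ""
    failures: List[str] = []
    for _ in range(max_attempts):
        draft = model(prompt + feedback)
        failures = [msg for check in checks if (msg := check(draft)) is not None]
        if not failures:
            break  # the gate opens: every check passed
        feedback = "\nRevise. Problems found: " + "; ".join(failures)
    return draft, failures


def needs_source(draft: str) -> Optional[str]:
    """Toy check: flag drafts that state no source."""
    return None if "source:" in draft else "claim has no stated source"


def stub_model(prompt: str) -> str:
    """Toy model that adds a source only after being asked to revise."""
    if "Revise" in prompt:
        return "Growth may reach 40% (source: internal forecast)."
    return "Growth will reach 40%."


draft, failures = gated_answer(stub_model, "Forecast growth.", [needs_source])
print(draft, failures)
```

The caller always receives the list of outstanding failures, so a draft that never passes the gate is returned with its problems attached instead of being presented as verified.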

No. The Core operates outside the model. It does not change weights or perform training. This characteristic makes the architecture portable, but again, it is still in research.

Initial testing suggests uplift for smaller models, but this remains experimental. The whitepaper includes preliminary observations, not commercial claims.

Because institutions need visibility into what comes next. The whitepaper surfaces concepts that could influence:

• governance
• auditability
• safety layers
• multi-agent reasoning
• long-term architecture direction

It is a strategic preview, not a deployed solution.

No. The architecture is under active iteration. What the whitepaper provides is a snapshot of the research direction as it stands today.

To show what is being discovered, to clarify the architectural philosophy, and to outline the reasoning structures that may influence the next generation of agentic AI. It is an invitation to observe—not a promise, not a product.

Updates

The future will not be built by machines or by people alone, but by the intelligence that emerges when humans and AI create together.

Every model can generate text. Only the Core will teach it how to really think.

Why it matters: This is the difference between “content generation” and “strategic intelligence.”
